If you are using data or code from this project, please cite the following paper. Thank you!

Kügler, D; Sehring, J; Stefanov, A; Stenin, I; Kristin, J; Klenzner, T; Schipper, J; Mukhopadhyay, A (2020): i3PosNet: Instrument Pose Estimation from X-Ray in temporal bone surgery. IPCAI 2020 and IJCARS 15(7), 1137-1145, Springer, doi: 10.1007/s11548-020-02157-4

Reception

I am extremely honored to have received the “IPCAI 2020 Best Machine Learning Paper Award” sponsored by NVIDIA. It is very rewarding to see the product of many years of work recognized so significantly.

Video presentation

Paper

i3PosNet: Instrument Pose Estimation from X-Ray in temporal bone surgery

Abstract

Purpose

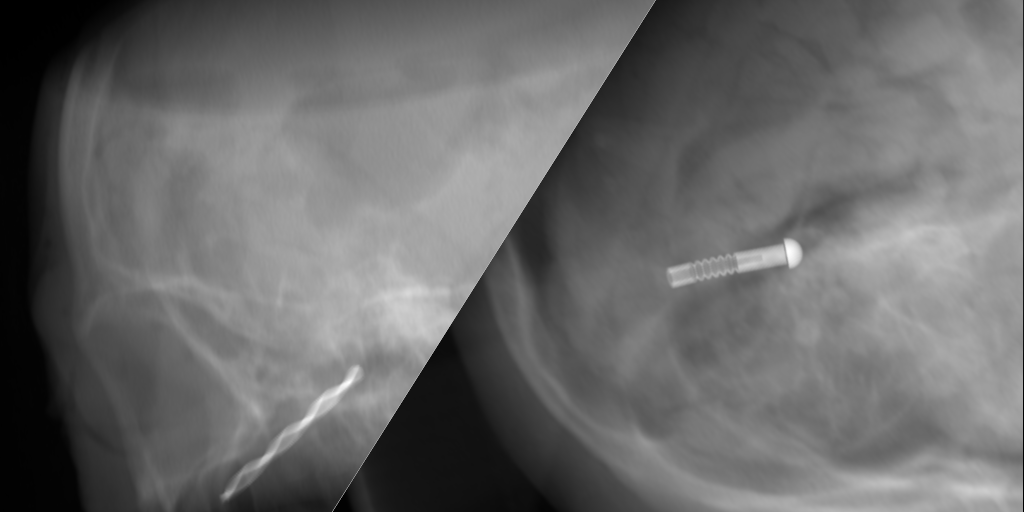

Accurate estimation of the position and orientation (pose) of surgical instruments is crucial for delicate minimally invasive temporal bone surgery. Current techniques either lack accuracy and/or suffer from line-of-sight constraints (conventional tracking systems), or expose the patient to prohibitive ionizing radiation (intra-operative CT). A possible solution is to capture the instrument with a C-arm at irregular intervals and recover the pose from the image.

Methods

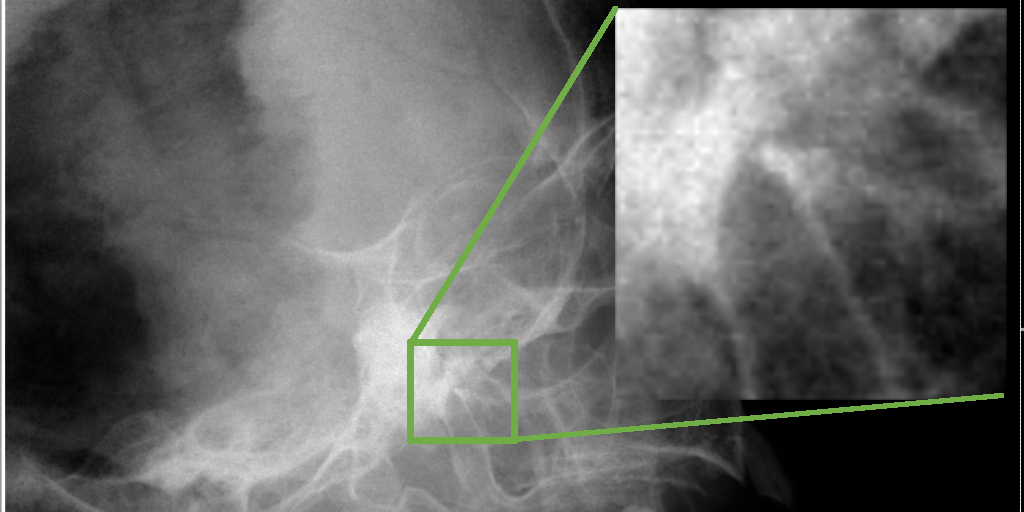

i3PosNet infers the position and orientation of instruments from images using a pose estimation network. The framework considers localized patches and outputs pseudo-landmarks. The pose is then reconstructed from these pseudo-landmarks by geometric considerations.
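To make the geometric reconstruction step more concrete, here is a minimal sketch of how a 2D pose could be recovered from pseudo-landmarks. It is not the implementation from the paper. It assumes a hypothetical landmark layout in which all landmarks lie along the instrument's main axis, are ordered from back to front, and are placed symmetrically around the instrument's reference point. The function name `pose_from_axis_landmarks` and this layout are illustrative assumptions only.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def pose_from_axis_landmarks(landmarks: List[Point]) -> Tuple[Point, float]:
    """Estimate (position, forward_angle_in_radians) from 2D pseudo-landmarks.

    ASSUMPTION (hypothetical layout, not taken from the paper): the landmarks
    lie along the instrument's main axis, are ordered back to front, and are
    symmetric about the instrument's reference point.
    """
    if len(landmarks) < 2:
        raise ValueError("need at least two landmarks")

    n = len(landmarks)
    # Under the symmetry assumption, the centroid estimates the position.
    cx = sum(x for x, _ in landmarks) / n
    cy = sum(y for _, y in landmarks) / n

    # Second-order moments of the landmark cloud around the centroid.
    sxx = sum((x - cx) ** 2 for x, _ in landmarks)
    syy = sum((y - cy) ** 2 for _, y in landmarks)
    sxy = sum((x - cx) * (y - cy) for x, y in landmarks)

    # Orientation of the principal axis (total least-squares line fit).
    # This angle is only defined up to a flip of 180 degrees.
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    dx, dy = math.cos(theta), math.sin(theta)

    # Resolve the flip using the assumed back-to-front landmark ordering.
    fx = landmarks[-1][0] - landmarks[0][0]
    fy = landmarks[-1][1] - landmarks[0][1]
    if dx * fx + dy * fy < 0:
        dx, dy = -dx, -dy

    return (cx, cy), math.atan2(dy, dx)


if __name__ == "__main__":
    position, angle = pose_from_axis_landmarks([(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)])
    print(position, math.degrees(angle))
```

Fitting a line through all landmarks, rather than using only two of them, averages out per-landmark prediction noise. Any real layout (for example, additional landmarks perpendicular to the axis) would need a correspondingly adapted reconstruction.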

Results

We show that i3PosNet reaches errors <0.05 mm. It outperforms conventional image registration-based approaches, reducing average and maximum errors by at least two thirds. i3PosNet trained on synthetic images generalizes to real X-rays without any further adaptation.

Conclusion

The translation of deep learning-based methods to surgical applications is difficult because large representative datasets for training and testing are not available. This work empirically shows sub-millimeter pose estimation with a network trained solely on synthetic data.

License

The datasets and the paper are shared under the terms of the Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA 4.0) and the Creative Commons Attribution 4.0 International License (CC BY 4.0), respectively.

We provide the code under the terms of the Apache 2.0 License. We ask that you reference our work in any derivative work by citing our paper.

Code

Datasets

Dataset A: Synthetic

Archives of DICOM and projection description files

- Archive of training data (5.4 GB)

- Archive of validation and testing data (1.1 GB)

Dataset B: Surgical Tools

Archives of DICOM and projection description files

- Archive of training data (9.9 GB)

- Archive of validation and testing data (1.9 GB)

Dataset C: Real X-rays

Archive of validation and testing data (299 MB)

Models

We will make models available soon.